I have been looking at AI as a thinking tool: not as something to generate content (which I think it is essentially lousy at), but as an aid to thinking. I have heard a number of people at conferences and elsewhere say that AI is like the calculator, and that calculators were supposed to be the end of math. What calculators really did was get rid of a lot of pencil-and-paper grunt work and let people think about steps and processes instead. It is the difference between writing with a pen in a notebook and word processing. Back in the 90s, an uncle of mine described the word processor as the chainsaw of the writing world. (He grew up in Minnesota in the 30s and spent a lot of time chopping wood with an axe.) Imagine someone being handed a chainsaw and using it like an axe. That person would chuck the new tool and go back to the old one, and they should. We are in a similar place with AI: I don’t think we are using it right, at least not yet.

I have been looking at AI as a thinking tool. Not as something to generate content (which I think it is essentially lousy at), but as an aide to thinking. I heard a number of people at conferences and elsewhere talk about how AI is like a calculator and how calculators were supposed to be the end of math. What calculators really did was get rid a lot of grunt work with the pencil and allowed people to think about steps and processes instead. It is the difference between writing with a pen in a notebook and word processing. Back in the 90s an uncle of mine described the word processor as the chain saw of writing world. (He grew up in Minnesota in the 30s and spend a lot of time chopping wood with an axe.) Imagine if someone was given a chainsaw and started using it as an axe? That person would chuck the new tool and go back to using the old – and they should. We are in a similar place with AI: I don’t think we are using it right, at least, not quite yet.

As an example, I asked AI to generate guitar tablature for the tune “Happy Birthday.” It produced some tab. I then asked it to rewrite the tune in the form of one of Bach’s two-part inventions, and it did, even explaining the voices and the process. The problem was that it did not know the tune to “Happy Birthday”: its original tab was gibberish, and the two-part invention built on it was just as bad. What was absolutely spot on was its explanation of how to create a two-part invention. If I had had the time, I could have fed it the correct tune, added some chords, and fixed it. I have countless examples of AI getting fundamentals and facts wrong: if I put in a chapter of an OER textbook and ask it to create 20 multiple-choice questions, it may ask “What color is the table header on page 25?” when it decides the chapter is a little thin. So, in my experience, it is good at processes, not facts.

Picasso once said of computers: “But they are useless. They can only give you answers.” So when you are working with AI, getting “answers” is really the wrong way to use it, although it can do that in limited ways, since it is also trained on Wikipedia. What it does well is understand and express information through processes. I started thinking about how I think about ideas and which problem-solving methods I use. In my ABE and English classes, I would have students use Rogerian and Toulmin methods to analyze arguments, and I would also use alternative problem-solving techniques such as Synectics. In short, I am interested in anything that helps my students analyze arguments outside of classical debate, which creates “right and wrong” and “winners and losers.” I think we are all too familiar with why these are necessary skills to teach. Jennifer Gonzalez has a great list of alternatives to traditional debate; she focuses on speaking and listening, which are lost arts. Maybe some of these non-traditional methods could be used with AI to help students think about problems in new ways.

While revisiting all of this, I ran into Kye Gomez’s “Tree of Thought” prompts. If you have not heard of it, this is an approach to problem-solving that maps out the different paths and potential solutions to a problem, structured much like a decision tree. The method is grounded in the principles of cognitive science and systems thinking, where the emphasis is on understanding and navigating complex thought processes by visualizing them as interconnected branches, each representing different possible actions and outcomes. The approach is particularly relevant to complex problems where traditional linear thinking may fall short.

The method involves breaking down a problem into its core components and exploring each branch’s possible decisions and outcomes. This helps in understanding the problem from multiple perspectives and encourages a comprehensive analysis of potential solutions. By visualizing thought processes as a tree, individuals can systematically evaluate the implications of each decision, leading to more informed and strategic choices.
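To make the tree idea concrete, here is a minimal Python sketch (3.9+), assuming each branch is a hand-written "thought" with a made-up score for how promising it looks. The names `ThoughtNode`, `all_paths` and `rank_paths`, and every branch label and score, are hypothetical. The sketch simply enumerates every path from the root problem to a leaf and ranks the paths by total score. It is not the search used in Gomez's prompts or in Yao et al., where a language model proposes and scores the thoughts and weak branches are pruned as the search proceeds.

```python
from dataclasses import dataclass, field


@dataclass
class ThoughtNode:
    """One step of thinking; its children are the options it opens up."""
    label: str
    score: float = 0.0  # hypothetical judgement of how promising this step is
    children: list["ThoughtNode"] = field(default_factory=list)

    def add(self, label: str, score: float = 0.0) -> "ThoughtNode":
        """Attach a new branch under this thought and return it."""
        child = ThoughtNode(label, score)
        self.children.append(child)
        return child


def all_paths(node: ThoughtNode, prefix: tuple = (), total: float = 0.0):
    """Yield (labels, total_score) for every root-to-leaf path."""
    labels = prefix + (node.label,)
    total += node.score
    if not node.children:
        yield labels, total
    for child in node.children:
        yield from all_paths(child, labels, total)


def rank_paths(root: ThoughtNode) -> list[tuple[tuple, float]]:
    """Return every path through the tree, most promising first."""
    return sorted(all_paths(root), key=lambda p: p[1], reverse=True)


# Usage with made-up branches and scores
root = ThoughtNode("Students are dropping out")
engage = root.add("Improve engagement", 2)
engage.add("Add active-learning tasks", 3)
engage.add("Add more lectures", -1)
gate = root.add("Tighten admissions", 1)
gate.add("Fewer adult learners served", -2)

for labels, total in rank_paths(root):
    print(f"{total:+.0f}  " + " > ".join(labels))
```

With these invented scores the active-learning branch ranks first (total 5). The point is less the numbers than the habit: every decision is written down as a branch, and each branch's consequences can be compared side by side.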

Here are some key aspects of the “Tree of Thought” method:

- Pattern Recognition: Recognizing and organizing different types of information and knowledge to form coherent patterns.

- Iterative Learning: Continuously refining and adapting thoughts based on new information and feedback.

- Non-Linear Thinking: Moving away from linear, step-by-step problem-solving approaches to more dynamic and interconnected thinking.

- Knowledge Flow: Understanding that knowledge is not static but flows and evolves, requiring flexible thinking structures.

In the context of problem-solving, the “Tree of Thought” method is a powerful tool for navigating complexity and making informed decisions based on a holistic view of the problem space. Mind you, it is meant to train the AI to think, but teaching students these prompt engineering techniques can take critical thinking to a whole new level. What I like about it is that it can replace “debate” thinking and let contending voices around a problem reach some agreement about what the problem is, who or what it affects, and what solutions might arise from shared understanding, rather than the winner/loser paradigm of traditional debate. For students, imagine using it to test Stephen Downes’ Guide to the Logical Fallacies, which I have used with my English 101 students. One could create a Tree of Thought where two of the “experts” commit one or more logical fallacies and one does not. We could also add something like Nickols’ Thirteen Problem Solving Models to the GPT’s knowledge bank.
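Here is a minimal sketch of how such a fallacy exercise might be assembled, assuming you paste the resulting text into a chat tool by hand (nothing here calls an AI service). The function name `build_fallacy_prompt` and the wording of the role instructions are hypothetical; the core wording follows the three-expert prompt used later in this post. For simplicity it assumes exactly one named fallacy for each of the two flawed experts.

```python
def build_fallacy_prompt(question: str, fallacies: list[str]) -> str:
    """Build a three-expert Tree of Thought prompt in which Experts 1 and 2
    each commit one named fallacy and Expert 3 reasons without fallacies."""
    if len(fallacies) != 2:
        raise ValueError("expected exactly two fallacies, one per flawed expert")
    # Hypothetical role instructions; students later try to spot the fallacies.
    roles = [
        f"Expert {i} should commit the fallacy of {name} somewhere in their reasoning."
        for i, name in enumerate(fallacies, start=1)
    ]
    roles.append("Expert 3 should reason carefully and commit no logical fallacies.")
    lines = [
        "Imagine three different experts are answering this question.",
        *roles,
        "All experts will write down 1 step of their thinking, then share it "
        "with the group. Then all experts will go on to the next step, etc.",
        "If any expert realises they're wrong at any point then they leave.",
        f'The question is "{question}"',
    ]
    return "\n".join(lines)


print(build_fallacy_prompt("Should homework be banned?", ["straw man", "slippery slope"]))
```

One design choice to consider: because of the "leave" rule, a flawed expert may drop out early once the model notices the fallacy. If you want all three voices to stay in the discussion for students to critique, you could remove that line.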

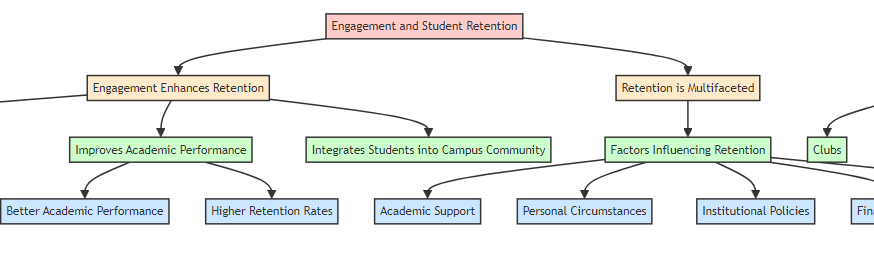

In this case, I went into ChatGPT (GPT-4o) and entered the prompt: “Imagine three different experts are answering this question. All experts will write down 1 step of their thinking, then share it with the group. Then all experts will go on to the next step, etc. If any expert realises they’re wrong at any point then they leave. The question is ‘What is the connection between student engagement and retention?’” It gave me three well-thought-out perspectives and some shared conclusions. I thought it was really useful, but it seemed dense and complex, and I wanted to separate out the arguments. I asked whether it could put the arguments into a concept map. It said it could and gave me an outline that I could use to build one. That was fine, but it occurred to me that ChatGPT can output code, so I asked for the concept map in HTML5 so I could visualize the arguments. It did it. I next asked for the different levels in the map to be color-coded, and it did that as well.
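ChatGPT’s actual HTML is not reproduced here. As a rough idea of what a color-coded concept map in plain HTML5 can look like, here is a minimal Python sketch that turns a nested outline into nested lists, giving each level its own color. The function names `outline_to_html` and `concept_map_page`, the colors, and the placeholder outline labels are all hypothetical, not what ChatGPT produced.

```python
from html import escape

# One background color per depth (dark to light); deeper levels reuse the last.
LEVEL_COLORS = ["#1f4e79", "#2e75b6", "#9dc3e6", "#deebf7"]


def outline_to_html(outline: dict, depth: int = 0) -> str:
    """Render a nested {idea: {sub-idea: {...}}} outline as nested <ul> lists."""
    color = LEVEL_COLORS[min(depth, len(LEVEL_COLORS) - 1)]
    text = "#fff" if depth < 2 else "#000"  # white text on the two darker levels
    items = []
    for idea, children in outline.items():
        inner = outline_to_html(children, depth + 1) if children else ""
        items.append(
            f'<li><span style="background:{color};color:{text};'
            f'padding:2px 6px;border-radius:4px">{escape(idea)}</span>{inner}</li>'
        )
    return "<ul>" + "".join(items) + "</ul>"


def concept_map_page(title: str, outline: dict) -> str:
    """Wrap the rendered outline in a minimal HTML5 document."""
    return (
        '<!DOCTYPE html>\n<html lang="en">\n<head><meta charset="utf-8">'
        f"<title>{escape(title)}</title></head>\n"
        f"<body><h1>{escape(title)}</h1>{outline_to_html(outline)}</body>\n</html>"
    )


if __name__ == "__main__":
    # Placeholder structure; replace the labels with the arguments from the AI's answer.
    outline = {
        "Engagement and retention": {
            "Perspective 1": {"Supporting point": {}, "Counterpoint": {}},
            "Perspective 2": {"Supporting point": {}},
            "Shared conclusions": {},
        }
    }
    print(concept_map_page("Engagement and retention", outline))
```

Running it as `python concept_map.py > concept_map.html` produces a page you can open in any browser. Nested lists are the simplest structure that shows levels; a true node-and-link diagram would need a drawing library or SVG.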

I started thinking about this: my English students could do the same thing with any of their readings. Any piece of information, article, or book can have an information-visualization lens applied to it (anything in the Periodic Table of Visualization Methods, for instance), which lets students get a toehold on different ideas in the ways they understand best.

- Downes, Stephen (2024) Stephen’s Guide to the Logical Fallacies. www.fallacies.ca

- Gomez, Kye (2023) Tree of Thoughts prompts. GitHub.

- Gonzalez, Jennifer (2015) The Big List of Class Discussion Strategies. Cult of Pedagogy.

- Lengler, Ralph and Eppler, Martin J. (n.d.) A Periodic Table of Visualization Methods. v.15. visual-literacy.org.

- Nickols, Fred (2020) Thirteen Problem Solving Models. Distance Consulting LLC.

- Yao, Shunyu, et al. (2023) Tree of Thoughts: Deliberate Problem Solving with Large Language Models. arXiv:2305.10601. Cornell University.