“Things are not as they are seen, nor are they otherwise.” – Lankavatara Sutra

In education, discussion of Artificial Intelligence has increased because of education institutions’ growing interest in Learning Analytics. Learning Analytics requires a lot of examination, and this is not really that post. I am interested in what we think we are talking about when we use terms like “intelligence.” How we define “intelligence” can make a huge difference in how we think about AI. Stephen Downes recently posted a link to the article “What AI Cannot Do” by Chen Qiufan and Kai-fu Lee. Qiufan and Lee say that AI will not be able to be creative, empathetic, or dexterous. The tenor of the article is upbeat and concludes that “…AI will liberate us from routine work, give us an opportunity to follow our hearts, and push us into thinking more deeply about what really makes us human.” While this is a noble pursuit, I would push us first to understand the whole idea of intelligence.

I am not a fan of phrases like “artificial intelligence.” Intelligence can’t be “artificial” or it is not intelligence. I apparently have a very specific definition of “intelligence.” One thing I do know is that by using words like “artificial” (or “virtual”) we remove ourselves from that which is being declared artificial or virtualized. The artificial seems to be more ethically malleable than the real. It is a separation, an artificial one, that shifts us one step away from any ethical responsibility. (Just look at Zuckerberg or Bezos if you doubt this.) We abdicate our responsibility for what happens in the virtual, or for what an artificial intelligence might do with our data, in the name of whatever benefit (whether pedagogical or monetary), when there is nothing really “artificial” about artificial intelligence.

Let’s take a closer look at the term “artificial intelligence.” I am still trying to figure out what is artificial about AI and what the intelligence might be. Is the artificial bit supposed to be its non-humanness? It is created by humans using human language and human algorithms, hosted on human servers and directed to particular human ends (marketing, surveillance, etc.) via human agency. What makes this “intelligence” and not just a complicated tool? But what then is intelligence? Finding a definition of it anywhere in the discussion of AI is difficult.

The Oxford Dictionary defines intelligence as “The faculty of understanding; intellect…a mental manifestation of this faculty, a capacity to understand.” That definition just passes the buck to another ill-defined word: understanding. But a “capacity to understand” implies that the knower knows that they know (Steven Connor covers this brilliantly). Machines can find, record, and store information, but can they understand it? Do they know its meaning? Will they ever know that they know? And if they did, what purpose would that serve?

John Searle, in his 1999 book Mind, Language, and Society, described the “strong AI” position, which he himself argued against, as the view that the “…appropriately programmed computer really is a mind, in the sense that computers given the right programs can be literally said to understand and have other cognitive states.” Here we can only imagine what “appropriately programmed” means, and what are “the right programs”? The literature of AI is filled with promises of future sentience. I love that phrase “can be literally said to” – it is so passive – they can also be literally said to not understand. And what defines a “cognitive state”? What if the mind is not a collection of specific mechanistic or chemical conditions? The “mind” (thinking, feeling, knowing, etc.) might be a process of the whole body and environmental gestalt. Most of these definitions imply some kind of definition of the mind or consciousness that we don’t yet actually have.

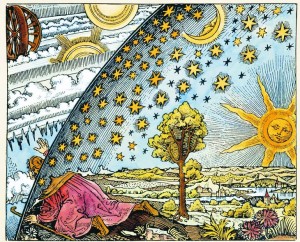

Getting back to Qiufan and Lee: can AI create? Shouldn’t the first question be why humans create? We have to know what creativity is, and its connection to intelligence, before we make a simulacrum of it. This entire problem swirls around our inability to define things such as “intelligence.” What is it to be creative? It is not the production of art but, again, the desire to create. We create in an attempt to mediate our relationship with existence, the world, and others.

The point that Qiufan and Lee make about dexterity is interesting because it is not really an intelligence problem but an engineering problem. Engineering problems are easier to solve than epistemological ones. Their idea is that AI will not be able to perform surgery because of a lack of dexterity. With that said, I am looking forward to reading about the lawsuits around the first AI-directed heart surgeries. We seem to be stupid enough to let AI drive our cars, so why won’t we eventually let robots operate on us?

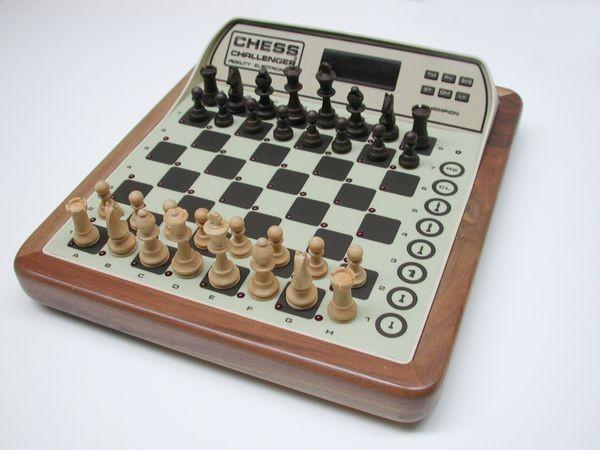

What is the motivation behind AI? It is one thing to have a database of chess positions; it is another thing to actually want to play chess. I had little interest in whether Deep Blue could “beat” a chess grandmaster. I am more interested in the fact that there are humans, yoked with all of the distractions and obstructions to sustained mental analysis, who can play at the grandmaster level, play against other grandmasters, and even beat the occasional chess computer. I used to beat the “Chess Challenger 7” because I quickly figured out which kinds of chess openings were part of its programming and which weren’t. Deep Blue was a database and an algorithm meant to search through vast numbers of possible moves and make the statistically best move every time. Deep Blue can’t do anything else: it can’t get insomnia, drink too much the night before, get angry, be afraid, go through puberty, have bad dreams – experience all the things in the world that prevent sustained analysis. Without the obstruction of shared human failings, Deep Blue is not really interesting to me, even though how Deep Blue won and lost (and was dismantled) is instructive. We write poetry and play chess because of the obstructions that each one of those disciplines creates. Both require a level of focus and sensitivity to accomplish.

Artificial intelligence is actually a crude mechanism and a subset of human “intelligence.” AI does not “know” things: it can gather data and process it, but it is never aware that it knows something. In order for something to be known there must be a knower. This is why knowledge cannot be stored in a non-human appliance. Information can be stored, but knowledge is the engagement and use of that information. This requires awareness. All of our definitions of knowledge require some kind of awareness. We leave ourselves in a terrible ethical bind when we abandon that notion of intelligence: we are either assuming that awareness is not needed for knowledge or we are assuming that the algorithm itself is aware. In either view, we dislocate human agency, and through that, abdicate our own responsibility for the actions we set in motion when we release AI into the wild.

Intelligence requires the engagement of obstruction and the presence of a motivation. I think this is what Aristotle was getting at when he said in the opening of the Metaphysics, “All men by nature desire to know.” This is the motivation: we are curious – we use our senses to engage with the world and our brains have evolved to look for similarities, differences, utility, principles – all kinds of things run through our heads as we attempt to make sense of the world. All of this constitutes what Nietzsche called “perspective” – what we think we know is subjective because we process the world through our unique experience as well as our senses.

I am not a fan of how many questions are in this posting. But I am even less a fan of how few questions educators are posing to those currently touting VR and AI as solutions to problems in education, especially when they are promoting these tools as a “brain science” approach to education. For too long, education institutions have accepted the responsibility for student safety, accessibility, and data security. This needs to be put back onto the companies that are building these tools by creating purchasing rubrics that address safety, accessibility, and data security.