I am at Achieving the Dream’s Dream 24 conference in Orlando, FL, with a team from Clover Park Technical College. I am interested in looking at HyFlex models from the instructional design perspective. These are my notes for colleagues – they are impressionistic and represent my own interests as an instructional designer – your results may vary, contents may settle during shipping.

From the program:

“Century College’s Multi-Modality Model addresses student needs for flexibility and high-impact learning, increasing access and equity for all students. A multi-modality classroom is where a faculty member uses three modalities (face-to-face, online synchronous, and online asynchronous) to teach a course and the key component is student choice, where students can choose any modality on any given day throughout the semester to fit their schedule and learning style preference. Resulting from lessons learned during and after the pandemic, college leadership employed a shared governance structure where faculty and administration worked collaboratively creating an innovative model that includes professional development, student supports, state of the art technology, and more! A robust data-driven assessment showed strong student outcomes, narrowing of equity gaps, and advancement of teaching and learning. Participants will explore how this model can be successfully implemented at their own institutions.”

Century College is in Minnesota – a career and technical college with 11,000 students and 169 degrees, diplomas, and certificates. 45% of its students are students of color and 50% are the first in their family to attend college.

They invested $4.4 million in 103 classrooms, 10 labs, and 21 student gathering spaces.

They discussed institutional buy-in. Multimodal classes provide flexibility to students.

One of the first speakers was the faculty union leader. 60-70% of the faculty are full-time, which makes buy-in all the more important. There is a faculty shared governance process, and communication was the key to faculty buy-in. The college had trouble implementing online learning because of a lack of support and a lack of faculty buy-in, so they knew they needed to provide the tools and support to make this model work.

This started with a student, a mother who couldn’t make it to class, so an instructor used Adobe Connect to livestream the course for her. The model grew out of that need for flexibility.

Benefits: Increased access, increased enrollment, and improved student success.

Challenges: Support for faculty and faculty workload, support for students, high-quality captioned videos, communication, and logistics.

Structure:

- Student choice among three modalities

- Faculty training

- Classroom tech support

- Resources to support students

- Assessment for improvement

Planning for implementation followed a two-year timeline. Faculty are paid for professional development, and they have to complete the training in order to teach in the model. The teaching and learning center, student workers, and IT work together to support it.

They contracted with Brian Beatty of San Francisco State University, a leader in the HyFlex model. They then built their own model from what they learned from him.

Technology:

- Front camera

- Speakers

- On-call IT support

- Tech assistant

- Room microphone

- Podium microphone

- Projector

- Assisted hearing device

- Wireless lavalier mic

This took a lot of training to get it to work for faculty.

Changes in course design: The faculty member said “go paperless” – use the LMS for everything, even if you are teaching face-to-face. The course functions as one community; you are not teaching three classes simultaneously. They use tools like Perusall, a social annotation tool. Clear, transparent assessments and rubrics provided ahead of time ensure that students in all three modalities are assessed equitably.

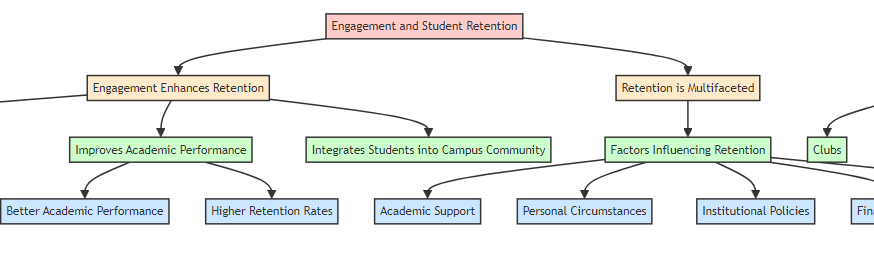

Students enrolled in multi-modality sections had higher pass rates than students in hybrid and online sections of the same course, and pass rates comparable to those in face-to-face sections. The model helped narrow equity gaps as well.

To implement:

Conduct a research and best-practices review, hold collaborative discussions with faculty and administration, gather student feedback, develop a model based on student choice, and be willing to invest.

__________________________

I am interested in this because of COVID and also because we did something similar 12 years ago at Tacoma Community College for the Health Information Management courses. Those students included people already working in the health field, parents, and people employed elsewhere. We used a very simple model: the LMS in conjunction with Elluminate (a web conferencing tool like Zoom) and a live phone connection.